How did we collect data from over 50 Clubs around the country? Tips for Managing Multisite Evaluation Logistics

Written By: Haley Umans

Project Team: Dr. Tiffany Berry (PI) and Dr. Michelle Sloper (PM), Dr. Lisa Teachanarong Aragon, Dr. Kathleen Doll, Haley Umans MA

Evaluation: Boys & Girls Clubs of America SMART Girls Outcome Evaluation

The evaluation was made possible by the Kimberly-Clark Foundation

In 2018, our YDEval team’s logistical prowess was put to the test as we planned and executed a national evaluation of an afterschool program, collecting quantitative and qualitative data from over 50 locations around the country. To make this evaluation a success, we had to be intentional and strategic about working with staff so that high-quality, complete data was collected without adding immense burden on the hard-working site-level staff. The purpose of this blog is to share the successful strategies we employed to carry out this complex evaluation, ensuring our sample size was large enough to be representative of the participants and to power our analyses, all while relying on site-based staff to carry out the substantial data collection efforts.

We worked with the Boys & Girls Clubs of America (BGCA) to evaluate their national SMART Girls program over 18 months.

About the Program: SMART Girls provides afterschool enrichment for girls ages 8 to 18 in a 10-lesson curriculum at BGCA Clubs around the country. The curriculum is tailored to three groups: (1) 8 – 10-year-old girls, (2) 11 – 13-year-old girls, and (3) 14 – 18-year-old girls.

Purpose of the Evaluation: The main evaluation goals were to: (1) explore the quality of implementation of the program across diverse BGCA clubs, (2) assess the effectiveness of the SMART Girls program in producing outcomes, and (3) determine best practices and a replication strategy to inform future implementation.

Evaluation Design: To do this, the YDEval team conducted a mixed-methods evaluation, employing a comparison group, pre-post surveys, qualitative data collection (i.e., focus groups, observations, and interviews), and tracking of implementation (i.e., implementation logs and attendance data). Case studies of SMART Girls Clubs were used to collect rich data about staff and implementation practices.

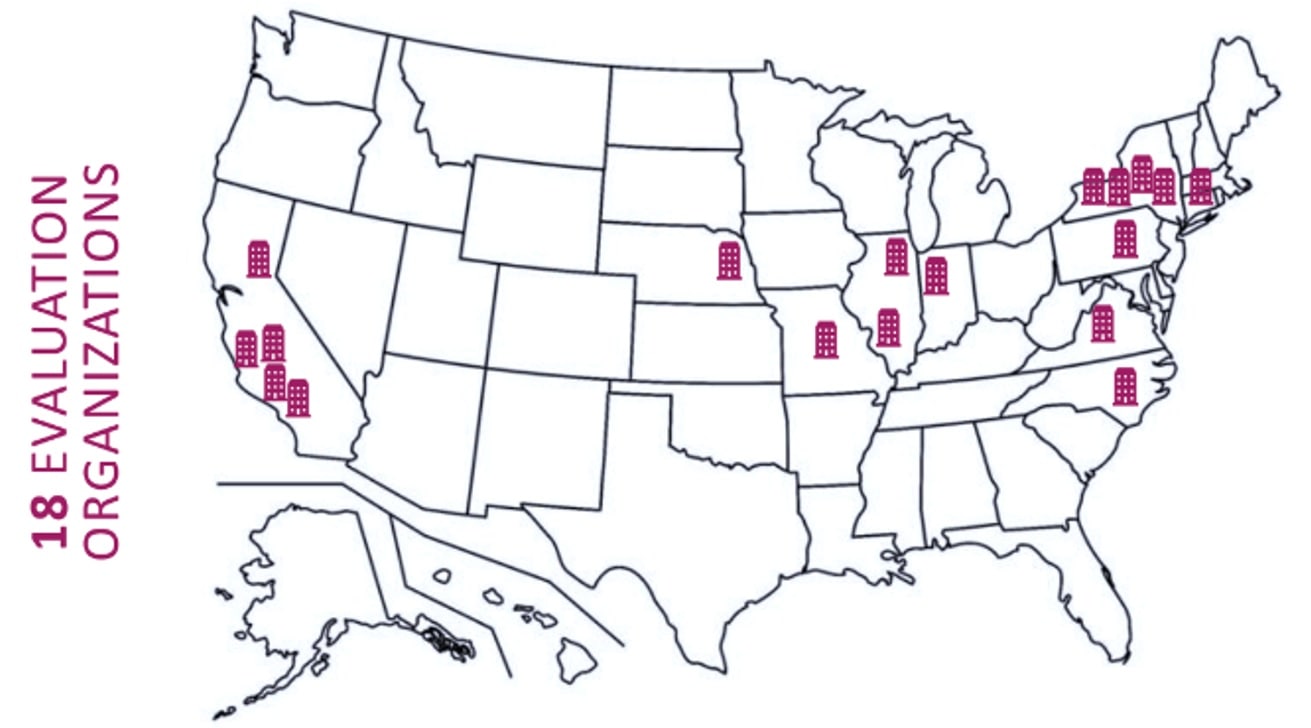

- Study Sample: The study involved 18 regional BGC Organizations around the United States, each of which selected three Clubs to participate in our study: two Clubs that would implement SMART Girls and one Club that would not, for a total of 54 individual Clubs.

- Treatment vs. Comparison:

- Treatment = Youth from BGC Clubs who participated in SMART Girls

- Comparison = Youth from BGC Clubs who do not participate in SMART Girls

- Pre-Post Surveys: Outcome surveys were administered to all youth in the study. Survey timing aligned to SMART Girls implementation: before the program and at the end of the program (approximately 16 weeks).

- Case Studies: Additional data collection at selected Clubs included program observations, focus groups with participants, interviews with staff, attendance records, and implementation logs. The YDEval team visited each case study Club.
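The sample arithmetic above can be checked with a short sketch. The counts come from the study description; the constant and variable names are illustrative only and do not come from the study materials.

```python
# Sample structure as described in the study design.
ORGANIZATIONS = 18            # regional BGC Organizations
TREATMENT_CLUBS_PER_ORG = 2   # Clubs implementing SMART Girls
COMPARISON_CLUBS_PER_ORG = 1  # Clubs not implementing SMART Girls

treatment_clubs = ORGANIZATIONS * TREATMENT_CLUBS_PER_ORG
comparison_clubs = ORGANIZATIONS * COMPARISON_CLUBS_PER_ORG
total_clubs = treatment_clubs + comparison_clubs

print(f"Treatment Clubs:  {treatment_clubs}")   # 36
print(f"Comparison Clubs: {comparison_clubs}")  # 18
print(f"Total Clubs:      {total_clubs}")       # 54
```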

Planning for Data Collection

Given the large scope of this evaluation, it was imperative that the Clubs involved in the evaluation study were interested in the study and committed to the additional work that accompanied participation. To ensure we had buy-in before data collection began, we engaged in the strategic selection of Clubs and intentional efforts to build relationships with the staff partners with whom we would coordinate study efforts.

Strategies to Recruit & Select Clubs

- Introductory Webinar: To reach Clubs and Organizations that might be interested in participating in the evaluation, Organization leaders from across the BGCA network were invited to attend an online webinar. The YDEval team facilitated the webinar to introduce the evaluation, discuss how Clubs would be involved, outline the responsibilities of the Clubs and the YDEval team, and identify some potential benefits of study participation. This webinar allowed us to communicate clearly about the expectations of the evaluation and bolster enthusiasm for study participation.

- Incentives: Clubs were offered incentives to participate, which were provided by BGCA. These incentives included SMART Girls’ swag and apparel (i.e., branded kits with promotional materials), tailored site-specific evaluation finding reports, and financial incentives in pass-through funding (funding was split among the 3 Clubs in each BGC Organization). To ensure ongoing participation, the funding was disbursed at two time points – when a site agreed to participate and when the evaluation was complete.

- Survey of Interest: After the introductory webinar, Clubs were asked to complete an online survey providing their information and indicating their interest in the evaluation. This survey asked questions about the context of the Club (e.g., location, history implementing SMART Girls). This information was essential for selecting Clubs for participation because it allowed the YDEval team to create priority lists of Clubs that matched the study selection criteria (e.g., ADD) and provided a diverse sample of Clubs.

- Letter of Agreement: Once Clubs were chosen to participate based on our inclusion criteria, leadership at each Club was asked to complete a letter of agreement (LOA), similar to a contract but less restrictive. The letter described the requirements the Club needed to meet in order to fulfill the study requirements and receive the incentives. This solidified the partnerships between the sites and the YDEval team and provided a useful reference for roles and responsibilities.

Strategies to Build Relationships

- Identify a Point Person and Divide up Contacts: Upon selection of a regional Organization and its three accompanying Clubs, a point person was identified from each Organization to serve as the main point of contact for the YDEval team and to coordinate data collection efforts at their Clubs. Each member of the YDEval team was responsible for working with 4-5 point people from participating Organizations (accounting for 12 – 15 Clubs each), which offered a more manageable amount of work for each team member. Furthermore, this allowed the YDEval team to build positive relationships with Clubs because BGC leaders had a consistent point of contact in the YDEval team to communicate with throughout the study. YDEval team members were responsible for tracking the participation of each of their assigned Organizations.

- Clear and Consistent Communication: The YDEval team created a number of communication materials to ensure that the study details were clear and consistent. Although initial communication was most important for Club leader buy-in, we continued these strategies throughout the project when we needed to communicate complex and critical details. To do so, we employed two core strategies:

- Email templates – One member of the YDEval team would create an email template that remaining team members could edit and refine for individual contacts. This ensured we were sending clear emails with sufficient detail and that those details were consistent across all Clubs.

- Handouts – The YDEval team also created 2-3-page visual handouts that were emailed to Clubs when necessary. The handouts summarized the tasks associated with the evaluation to reinforce and remind the Clubs of their role in the evaluation. These materials were also tailored to the audience, with unique versions going to different members of the study team (e.g., Org Leader, Club Leaders).

- Kick-Off Phone Calls: Each regional Organization participated in a kick-off phone call with their assigned member of the YDEval team. This created a one-on-one opportunity for the YDEval team to connect with the Clubs, explain the logistics of the evaluation, and answer any questions. Additionally, we could ask BGC leaders to tell us how we could tailor the evaluation study to ensure the tasks didn’t add undue burden to their work.

- Ongoing Adaptability: We approached our work as a partnership; therefore, it was important to ask Clubs if our planned processes would work for their Organization and Club and to incorporate any feedback into our evaluation processes. The ongoing collection of input and the flexibility to adapt our processes (to the extent possible) were necessary to ensure we were responsive to the Clubs where our data would be collected. Typically, the changes we were asked to make were simple fixes but made a big difference for the site (e.g., translating documents, sending surveys at a specific time, or including a specific person on our email correspondence).
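As a rough illustration of dividing up contacts, the sketch below deals 18 Organizations out to team members in round-robin order. The figure of four team members is an assumption inferred from the "4-5 point people each" description, not something stated in the post, and all names are placeholders.

```python
from collections import defaultdict

CLUBS_PER_ORG = 3  # each Organization selected three Clubs


def assign_point_people(organizations, team_members):
    """Deal Organizations out to team members in round-robin order."""
    assignments = defaultdict(list)
    for i, org in enumerate(organizations):
        member = team_members[i % len(team_members)]
        assignments[member].append(org)
    return dict(assignments)


# Placeholder names; the actual Organizations and staffing split are not listed.
organizations = [f"Organization {n}" for n in range(1, 19)]
team_members = ["Evaluator A", "Evaluator B", "Evaluator C", "Evaluator D"]  # assumed

assignments = assign_point_people(organizations, team_members)
for member, orgs in assignments.items():
    print(f"{member}: {len(orgs)} point people, {len(orgs) * CLUBS_PER_ORG} Clubs")
```

Under this assumption each evaluator ends up with 4 or 5 point people, which corresponds to 12 or 15 Clubs.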

Strategies Used During Data Collection

During data collection, we employed several valuable strategies to track data collection efforts, travel to 18 Clubs across the country, and minimize the burden on those involved in our study.

- Tracking Spreadsheet: The YDEval team created a detailed tracking spreadsheet in Google Sheets to note progress throughout the evaluation (e.g., marking when the Organization signed the letter of agreement, when the kick-off call occurred, and when surveys were mailed to Clubs). Each member of the YDEval team used this tracking system to update information about their sites and to track important milestones in the evaluation process that might require a reminder or follow-up.

- Travel Smart for Site Visits: The evaluation required that the YDEval team travel to 18 Clubs across the United States that were implementing the SMART Girls program to conduct our more intensive data collection case studies. These case study Clubs participated in in-person observations, focus groups with youth participants, and interviews with staff. To minimize costs, we planned to visit multiple Clubs in a single trip (e.g., one flight to the east coast would be used for 3-4 site visits). Each YDEval team member visited a region of Clubs, making the most of the cost and time associated with each trip. Typically, YDEval team members visited Clubs that were assigned to them as a point of contact because these were the Clubs with whom they were collaborating throughout the project.

- Online Survey Strategies: The Interest Survey was the only online survey administered by the YDEval team to identify and recruit Clubs and Organizations for participation in the evaluation. We employed a number of strategies to encourage responses:

- Distribution: BGCA leadership distributed the surveys via email so the message would come from a familiar email address instead of an unfamiliar member of the YDEval team.

- Incentives: Participants were entered into a raffle to win one of ten $50 Amazon gift cards if they completed the survey.

- Formatting: The email with the survey was formatted with SMART Girls’ and BGCA’s logos to ensure that the email appeared credible to recipients.

- Reminders: Multiple reminder emails were sent to encourage staff to complete the survey.

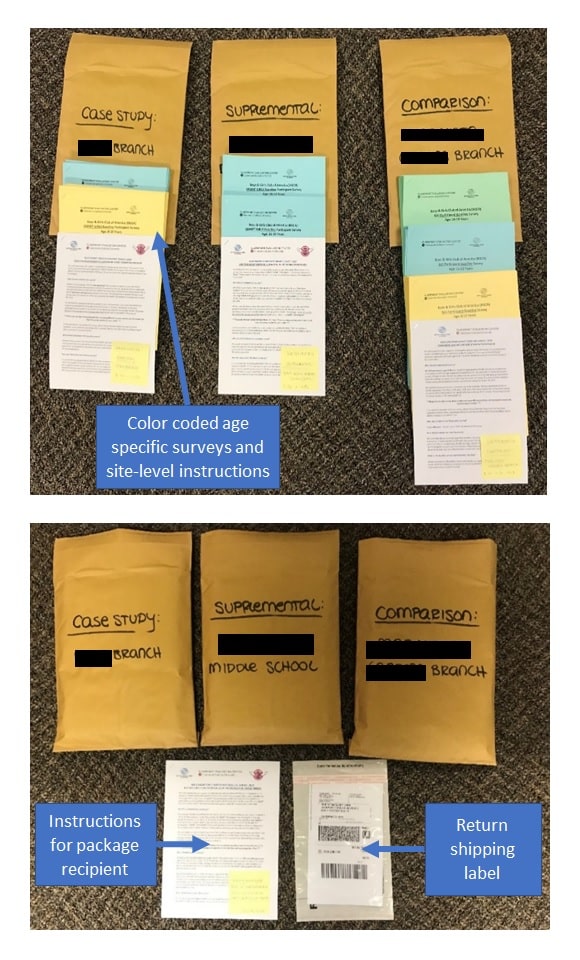

- Paper Survey Strategies: Because available technology at Clubs varied, a paper survey was used to survey SMART Girls program participants at their Clubs. The YDEval team mailed packages of paper surveys to the 18 Organizations to distribute at their three Clubs at two time points (pre-test and post-test). Additionally, there were six distinct versions of the survey: an age-appropriate version for each of the three SMART Girls age groups, each produced separately for SMART Girls Clubs (treatment) and comparison Clubs. For this reason, paper survey preparation, organization, and shipping were not simple tasks. We employed a number of strategies to alleviate the burden of paper data collection on Clubs:

- Intentional Shipping:

- Organizations were mailed a box with 3 separate envelopes (one for each Club), instructions for the point-person, and a return label (surveys could be returned in the same box). Pre-test and post-test surveys were mailed separately: pre-test surveys before the program and post-test surveys after the program (based on coordination with the point person).

- Each envelope was labelled with the name of the site it was meant to be delivered to.

- The envelopes were filled with the appropriate surveys for each site along with a letter of instructions for the site-level staff and a roster of youth participants who needed to complete the survey.

- Colored Paper: Some Clubs implemented SMART Girls with multiple age groups and therefore required separate versions of the surveys. Age-specific surveys were differentiated by different colored paper and given clear titles with the ages to signify that the surveys were different and needed to go to different groups of participants.

- Survey Labels: For post-test surveys, the paper surveys were pre-labeled with the names of participants who had completed the pre-test survey. This strategy was used to increase the likelihood that we would receive both pre-test and post-test data from the same individuals.

- Communication: An email template was developed to email the point-person with a description of package contents, a copy of instructions, and the tracking number for the package each time surveys were mailed. Survey shipment dates, tracking numbers, and packet information were tracked by the YDEval team in the Google Spreadsheet.
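The paper survey logistics above can be sketched as a small data model: six survey versions (three age groups by two conditions), one envelope per Club, and one shipment record per Organization and survey wave. This is a hypothetical illustration, not the team's actual spreadsheet; the class names, paper colors, participant names, and tracking number are all made-up placeholders.

```python
from dataclasses import dataclass, field
from itertools import product

AGE_GROUPS = ["8-10", "11-13", "14-18"]
CONDITIONS = ["treatment", "comparison"]
# Hypothetical colors; the post does not say which colors were used.
PAPER_COLOR = {"8-10": "yellow", "11-13": "green", "14-18": "blue"}

# Three age groups x two conditions = six survey versions.
SURVEY_VERSIONS = list(product(AGE_GROUPS, CONDITIONS))


@dataclass
class Envelope:
    club: str
    condition: str  # "treatment" or "comparison"
    roster: dict    # age group -> list of participant names

    def packing_list(self):
        """Return (age group, condition, paper color, copies) per version needed."""
        return [
            (age, self.condition, PAPER_COLOR[age], len(names))
            for age, names in self.roster.items()
            if names
        ]


@dataclass
class Shipment:
    organization: str
    wave: str             # "pre-test" or "post-test"
    tracking_number: str
    envelopes: list = field(default_factory=list)


# Made-up example data for one Organization's pre-test box.
shipment = Shipment(
    organization="Organization 1",
    wave="pre-test",
    tracking_number="TRACKING-NUMBER-PLACEHOLDER",
    envelopes=[
        Envelope("Club A", "treatment", {"8-10": ["Ava", "Bea"], "11-13": ["Cam"]}),
        Envelope("Club B", "treatment", {"14-18": ["Dee", "Eve", "Fay"]}),
        Envelope("Club C", "comparison", {"8-10": ["Gia"]}),
    ],
)

print(f"{len(SURVEY_VERSIONS)} survey versions")
for envelope in shipment.envelopes:
    for age, condition, color, copies in envelope.packing_list():
        print(f"{envelope.club}: {copies} x ages {age} ({condition}) on {color} paper")
```

Keeping the roster inside each envelope record means the same structure could later drive the pre-labeled post-test surveys, since the names of pre-test completers are already attached to the Club.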

Strategies Used After Data Collection

In appreciation of the time and effort given by the participating Clubs, it was important that we gave back to them. Beyond financial incentives, the Clubs wanted to learn from the evaluation too. In addition to the full comprehensive report, the YDEval team delivered: (1) Organization-specific reports, (2) reporting materials that summarized the study as a whole, and (3) a standalone executive summary from the full report. A brief description of the deliverables is included below:

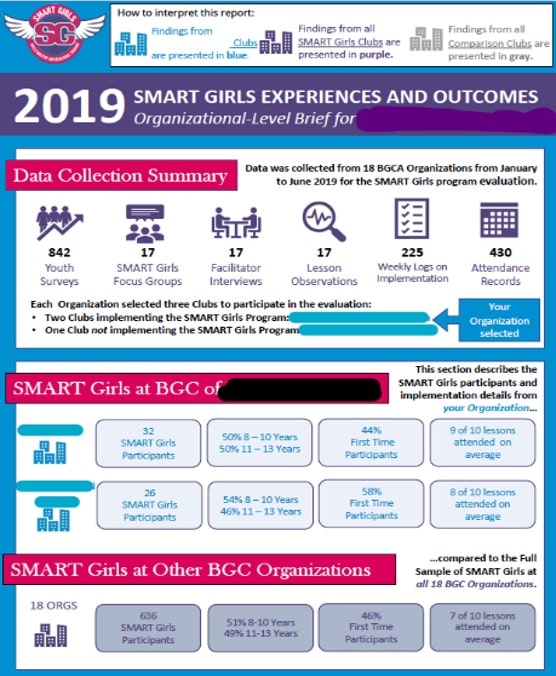

1. Organization-Level Reports: Four-page Organization-level briefs were created for each of the 18 Organizations in our study. The briefs summarized the findings from the three Clubs that participated in the evaluation (treatment and comparison Clubs), including information about data collection efforts, noted strengths and opportunities for growth for staff practices, and participant survey results, with comparisons to their non-participating Clubs and the full sample. Quotes from their youth participants were included when possible to share the voices of those who experienced the program.

2. Presentation Slide Decks: Comprehensive and condensed versions of a slide deck were developed to summarize findings from the evaluation. The condensed slide deck described the overarching findings of the evaluation, while the comprehensive slide deck incorporated figures that showed notable data points. This allowed BGCA to tailor presentation of the evaluation findings to their intended audiences.

3. Executive Summary: Although we worked hard to develop a comprehensive evaluation report, we did not assume that everyone in the Organization (or outside) had the time or bandwidth to engage with the full report. Because of this, we created a stand-alone executive summary that could be separated from the full report and shared throughout the Organization and beyond (at their discretion). The executive summary summarized the evaluation purpose, methods, and findings, transforming a 100-page report into an 8-page summary.

Due to the intentional planning and strategy employed throughout the evaluation study, we were able to collect enough data to supply BGCA with rich and rigorous findings that enhance their understanding of the impact of the SMART Girls program and help them improve the program so that staff are better supported in serving youth. To summarize, our main strategies included the following:

- Strategic recruitment and selection of Clubs to participate in the evaluation

- Building relationships with staff through clear and consistent communication

- Incentivizing participation in multiple ways

- Adapting to the needs of Clubs and being flexible when possible

- Minimizing the burden of data collection for site-based teams

- Providing tailored and simple reporting to be used for a variety of audiences